Key Takeaways

- During the 2025 proxy season, nearly 40% of European large-cap companies either had a formal artificial intelligence policy or discussed oversight of AI in their annual report.

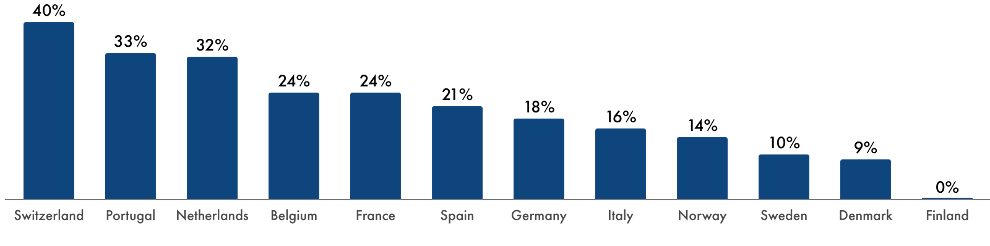

- Formal AI policies were most common among companies in Switzerland, Portugal and the Netherlands, and least common in Nordic markets.

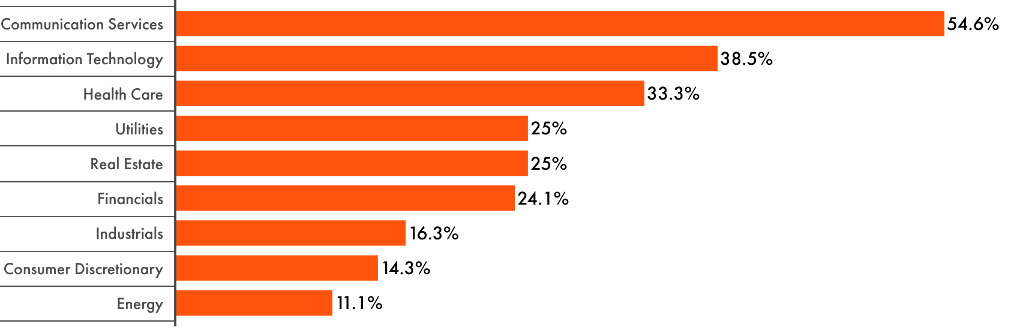

- More than half (54.6%) of companies in the communications sector have a formal AI policy, a significantly higher share than in the next-highest sector, information technology (38.5%).

- While EU regulatory requirements are still being finalized and phased in, investor expectations for board oversight of AI are growing.

In recent years, companies have rapidly begun to develop and adopt applications of artificial intelligence (AI) technologies throughout various aspects of their operations. Deployed and overseen effectively, AI technologies have the potential to make companies’ operations and systems more efficient and productive. However, as the use of these technologies has grown, so have the potential risks associated with companies’ development and use of AI.

These potential risks underline the need for boards to be cognizant of, and take steps to mitigate exposure to, any material risks that could arise from their companies’ use or development of AI. This article examines AI governance trends among Continental European public companies in 2025,1 with discussion of the regulatory landscape and evolving investor expectations.

Formal AI Oversight Is Becoming More Common

The 2025 proxy season marked the emergence of artificial intelligence governance as an area of board oversight in European markets. While only 20.7% of large-cap companies across Europe had formal AI policies in place, a further 18% referenced AI risks or oversight in their annual reports (Figure 1).2 The majority (61.3%) disclosed neither a formal AI policy nor any discussion of AI oversight.

Figure 1. AI Policies and Oversight at Large-Cap European Companies

Source: Glass Lewis Research. Note: Data as of 2025 proxy season period from Jan. 1 to June 30, 2025.

Among European markets, Switzerland appears to lead in AI policy adoption, with 40% of large-cap companies having policies in place, followed by Portugal and the Netherlands. At the other end of the spectrum, Nordic markets lagged the rest of the continent. While companies like Novo Nordisk, Hexagon, and Aker have been proactive in defining their approach to AI, overall adoption rates were low among Finnish (0%), Danish (9%), Swedish (10%) and Norwegian (14%) large-cap companies (Figure 2).

Figure 2. Prevalence of Company AI Policies in Europe by Country

Source: Glass Lewis Research. Note: Data as of 2025 proxy season period from Jan. 1 to June 30, 2025.

Additionally, several companies have announced commitments to implement AI governance structures or policies in the near future. UniCredit, for instance, disclosed its intention to establish a governance framework for responsible use of AI by the end of fiscal year 2025,3 and Poste Italiane committed to introducing an AI policy by 2026.4 Furthermore, in early 2025, E.ON confirmed that its management and works councils had agreed on guidelines for AI use.5

Different Adoption Rates for Different Sectors

Sector appears to be a determining factor in the pace of AI policy adoption. Companies in the communications sector are ahead of the curve with a 54.6% adoption rate, followed by IT at 38.5% (Figure 3). Financials showed moderate responsiveness to evolving requirements around AI, such as the industry-specific EU Digital Operational Resilience Act (DORA), with 24.1% of large European financial institutions adopting formal policies.6

Figure 3. AI Policy Adoption by Sector

Source: Glass Lewis Research. Note: Data as of 2025 proxy season period from Jan. 1 to June 30, 2025.

AI Governance Structure

Among companies that provided disclosure regarding AI governance, structures varied considerably. While a portion of large-cap companies established dedicated AI committees, the majority integrated AI oversight into the responsibilities of existing standard board committees, such as the audit or risk committee. In many cases, the board as a whole is assuming accountability for AI governance oversight.

Regulatory Landscape

The adoption of AI oversight policies by European companies has come ahead of the compliance requirements introduced by the EU AI Act,7 which represents the first comprehensive AI regulation. While the act’s prohibited practices provisions, which carry fines of up to €35 million (US$40.3 million)8 or 7% of global turnover, took effect in February 2025, broader requirements are still being phased in. However, some requirements were recently pared back, with the timeline for implementation delayed as part of an omnibus EU digital simplification package intended to “get rid of regulatory clutter” and promote innovation.9

While DORA has already introduced a regulatory framework for the digital risk management of financial entities, which covers any AI systems they are utilizing, for the most part companies that are taking steps to formalize AI governance practices are doing so voluntarily. This is in response to evolving investor expectations and their own recognition of the business case for addressing associated risks and opportunities.

Investor Expectations

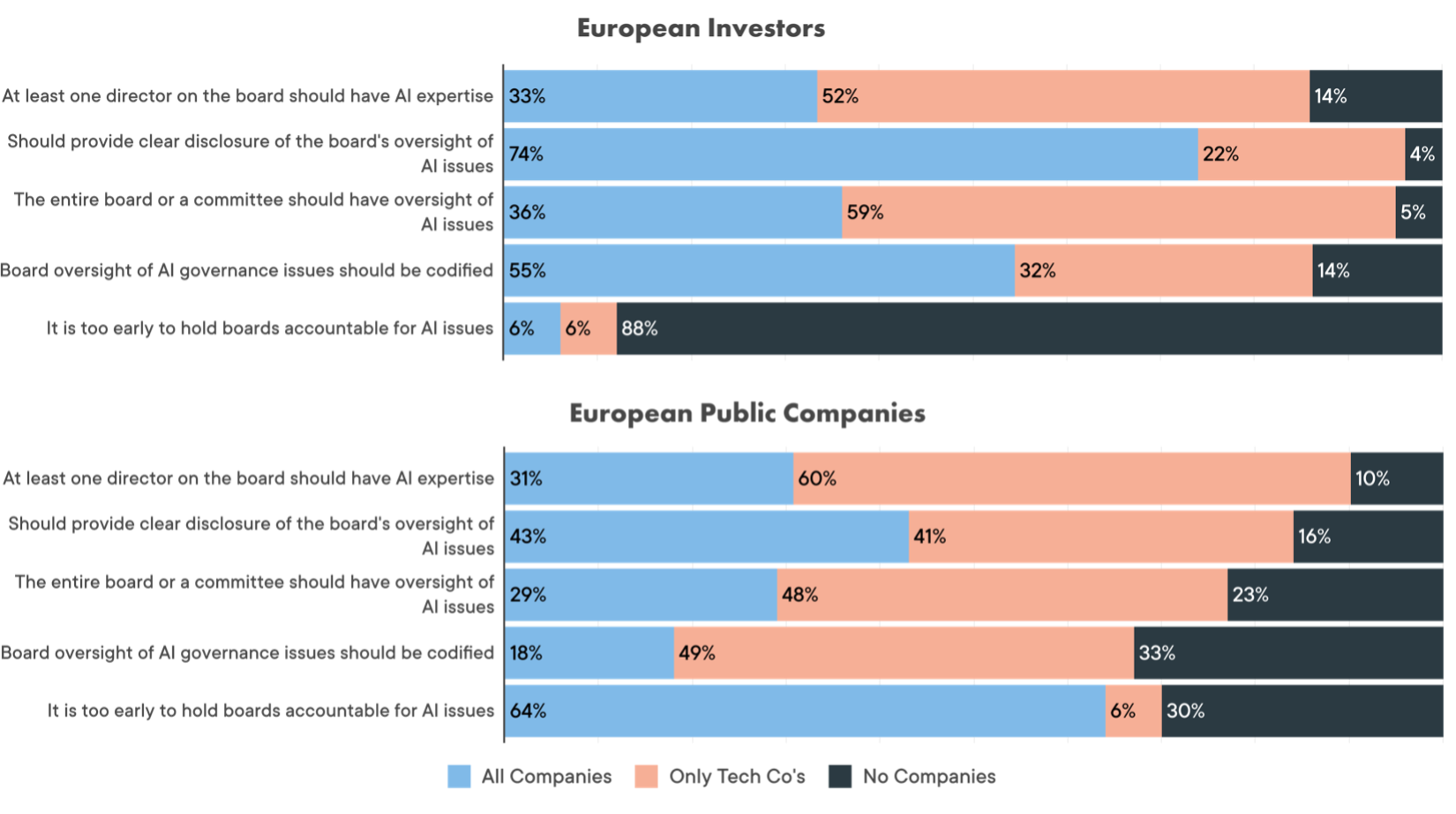

When we asked about AI oversight in our 2024 Policy Survey,10 we found a gap in expectations between investors and issuers. Whereas a majority of European public company respondents felt it was too early to hold the board of any company accountable for AI issues, nearly nine in ten European investors rejected this notion, calling for safeguards to mitigate associated risks, assess the impact of AI on company operations, and ensure the ethical use of AI. In particular, investors reported that they are looking for disclosure regarding the company’s approach to oversight, and its formal codification.

Figure 4. Expectations for AI Governance

Source: Glass Lewis Policy Survey 2024.

Regarding the specific governance structure, most of the investors surveyed do not expect an AI specialist on all boards right now; rather than individual expertise, they emphasized overall board familiarity, placing AI oversight in the broader context of risk management.

“We believe that the board of directors should be accountable for companies’ responsible development and use of AI. Boards play a key role in overseeing that corporate governance and strategy balance competitive deployment of new technology against potential risks. This requires board expertise and resources that are proportionate to the company’s risk exposure and business model. We do not expect individual board directors to have expertise on AI, but rather for the board to have or gather that expertise as a whole.” - Survey Respondent, European Investor

Many public companies agreed, including a European large-cap bank that viewed it as “Important for risk management, ethical use of AI, implementing and adjustments for regulators.”

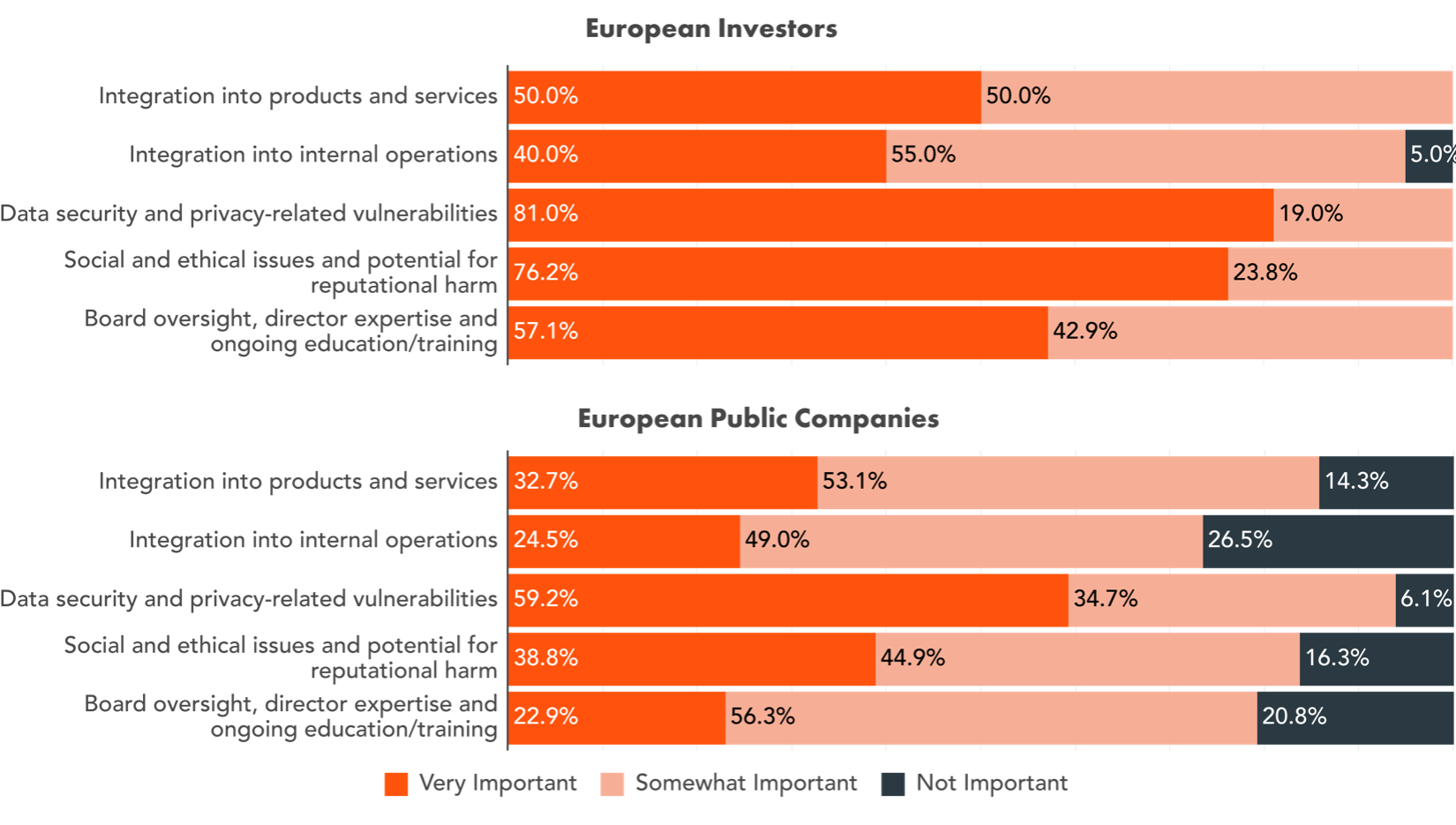

When we asked about specific areas of AI oversight, a majority of investors and issuers alike considered disclosure on AI data security and privacy-related vulnerabilities to be “very important.” While issuers (38.8%) were much less likely than investors (76.2%) to view social or ethical issues and the potential for reputational harm as “very important,” these factors received the second-most “very important” responses among both groups (Figure 5).

Figure 5. Components of AI Risk Assessment Disclosure

Source: Glass Lewis Policy Survey 2024.

Overall, investors were more likely than issuers to view all elements of risk assessment disclosure on AI and AI ethics as somewhat or very important. Notably, we observed a geographical split among companies, with European issuers less likely to view these elements as not important (16.8% on average across all five categories) than their North American counterparts (30.9%).

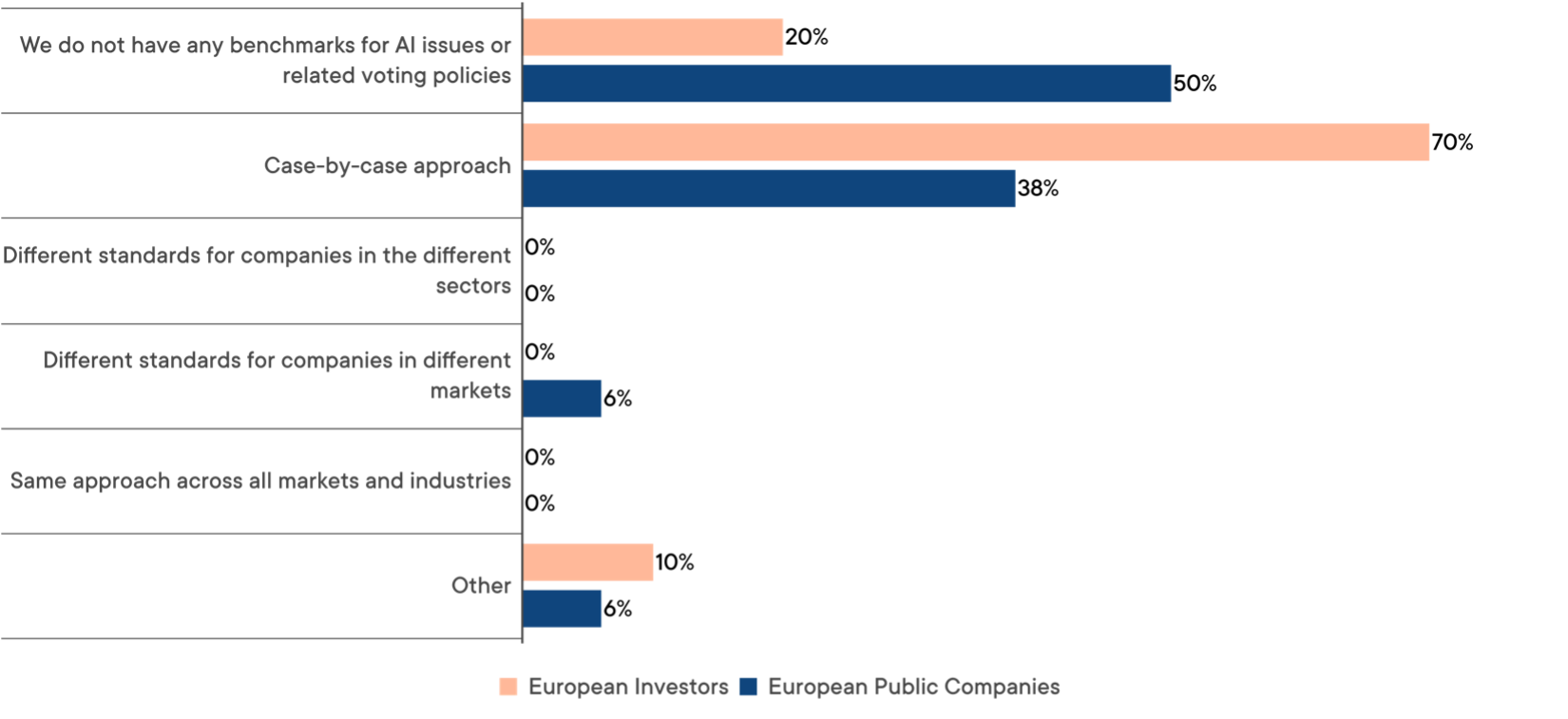

One year on, AI governance remains a work in progress. When we asked about the use of AI benchmarks and standards in our 2025 Policy Survey, the vast majority of both investors and companies reported that they either take a case-by-case approach, or have not developed any standard benchmarks.11

Figure 6. Use of AI Benchmarks and Standards

Source: Glass Lewis 2025 Policy Survey.

“Our sense is that it is too early to settle on set frameworks. However, we do have recurring expectations: if a company is developing AI, that they have robust governance in place appropriate for an emergent technology. If they are deploying AI, that they incorporate AI related considerations into existing governance structures while also being mindful of the potential need for new governance arrangements for novel technology use cases.” – Survey Respondent, U.K. Investor

Conclusion

The current efforts to address risks and opportunities around applications of AI suggest that European companies are preparing for the expanded use of AI, as well as evolving shareholder and wider stakeholder expectations around disclosure of AI risks and oversight. These trends are likely to accelerate as deadlines approach under the EU AI Act.

Notes and References

1. All subsequent references to “Europe” and “European” denote Continental Europe only.

2. For in-depth details, see Glass Lewis. 2025 Continental Europe Proxy Season Review. https://grow.glasslewis.com/2025-proxy-season-preview-continental-europe.

3. UniCredit. Annual Report and Accounts. 2024. Page 309. https://www.unicreditgroup.eu/content/dam/unicreditgroup-eu/documents/en/investors/financial-reports/2024/4Q24/2024-Annual-Reports-and-Accounts-General-Meeting-Draft.pdf.

4. Poste Italiane. Annual Report 2024. Page 21. https://www.posteitaliane.it/files/1476637295199/Annual-Report-2024-ENG.pdf.

5. E.ON Press Release. “E.ON sets standards for the responsible use of artificial intelligence.” January 15, 2025. Accessed November 25, 2025. https://www.eon.com/en/about-us/media/press-release/2025/eon-sets-standards-for-the-responsible-use-of-artificial-intelligence.html.

6. The EU Digital Operational Resilience Act. “DORA | Final Text.” Accessed December 9, 2025. https://www.digital-operational-resilience-act.com/DORA_Articles.html

7. The EU Artificial Intelligence Act. “Up-to-date developments and analyses of the EU AI Act.” Accessed Nov. 1, 2025. https://artificialintelligenceact.eu/

8. As of Nov. 25, 2025.

9. European Commission. Press Release. “Simpler EU digital rules and new digital wallets to save billions for businesses and boost innovation.” Accessed Nov. 20, 2025. https://ec.europa.eu/commission/presscorner/detail/en/ip_25_2718.

10. Glass Lewis. Policy Survey 2024: Results & Key Findings. https://grow.glasslewis.com/2024-policy-survey-results

11. Glass Lewis. 2025 Policy Survey Results & Key Findings. https://grow.glasslewis.com/2025-policy-survey-results.
